A standard approach for approximating the solution of an overdetermined system: find the model parameters that minimize the sum of the squared differences between observed and predicted values. This sum is known as the sum of squared residuals (SSR). The goal is to find the parameters \(\hat{\beta}\) that minimize the function \(S(\beta) = \sum_{i=1}^{n} (y_i - x_i^T \beta)^2\), where \(y_i\) is the \(i\)-th observed value and \(x_i\) is the corresponding vector of predictor values.

Method of Ordinary Least Squares (OLS)

- Adrien-Marie Legendre

- Carl Friedrich Gauss

The method of ordinary least squares is a cornerstone of regression analysis. It provides a direct way to estimate the unknown parameters in a linear model. The principle is to find the line (or hyperplane in multiple regression) that is closest to all the data points simultaneously. ‘Closest’ is measured by the vertical distances from each point to the line: OLS minimizes the sum of the squares of these distances (the residuals).
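As a rough illustration of this principle, the sketch below fits a line to five made-up data points and checks that nudging the fitted line in any direction increases the sum of squared vertical distances. The data, the helper `ssr`, and the variable names are assumptions introduced for this example only. The slope and intercept use the textbook formulas for simple (one-predictor) regression.

```python
import numpy as np

def ssr(intercept, slope, x, y):
    """Sum of squared vertical distances (residuals) from the points to the line."""
    residuals = y - (intercept + slope * x)
    return float(np.sum(residuals ** 2))

# Made-up illustrative data, roughly y = 1 + 2x with small deviations.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])

# Textbook OLS formulas for a single predictor with an intercept.
slope_hat = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
intercept_hat = y.mean() - slope_hat * x.mean()

best = ssr(intercept_hat, slope_hat, x, y)

# Shift the intercept and slope slightly; every shifted line should fit worse.
nudged = [
    ssr(intercept_hat + da, slope_hat + db, x, y)
    for da in (-0.2, 0.0, 0.2)
    for db in (-0.1, 0.0, 0.1)
    if (da, db) != (0.0, 0.0)
]

print("OLS line SSR:", best)
print("smallest SSR among nudged lines:", min(nudged))
```

The comparison against nudged lines is only a spot check, not a proof; the calculus argument is what establishes that the OLS line is the global minimizer.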

This minimization problem can be solved using calculus. By taking the derivative of the sum of squared residuals function \(S(\beta)\) with respect to the parameter vector \(\beta\) and setting it to zero, we derive a set of equations known as the ‘normal equations’. In matrix form, these are expressed as \(X^T X \hat{\beta} = X^T y\), where \(X\) is the matrix of independent variables and \(y\) is the vector of the dependent variable.
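The calculus step can be written out explicitly. The derivation below is a standard sketch, assuming \(X\) is the \(n \times p\) matrix whose \(i\)-th row is \(x_i^T\), so that \(S(\beta)\) is the squared length of the residual vector \(y - X\beta\):

\[
S(\beta) = (y - X\beta)^T (y - X\beta) = y^T y - 2\,\beta^T X^T y + \beta^T X^T X \beta
\]

\[
\frac{\partial S}{\partial \beta} = -2\,X^T y + 2\,X^T X \beta
\]

Setting the gradient to zero at \(\beta = \hat{\beta}\) gives \(X^T X \hat{\beta} = X^T y\). The matrix of second derivatives is \(2\,X^T X\), which is positive semidefinite, so the stationary point is a minimum rather than a maximum or saddle point.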

The solution for the estimated coefficient vector is then given by \(\hat{\beta} = (X^T X)^{-1} X^T y\). This closed-form solution is inexpensive to compute for problems of moderate size and provides a unique estimate, provided that the matrix \(X^T X\) is invertible (i.e., \(X\) has full column rank, so there is no perfect multicollinearity among the independent variables). Geometrically, the OLS solution corresponds to an orthogonal projection of the outcome vector \(y\) onto the vector subspace spanned by the columns of the predictor matrix \(X\). While powerful, OLS is sensitive to outliers, because squaring the residuals gives large errors a disproportionately large influence on the final fit.
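The following is a minimal sketch of these three points using NumPy on synthetic data: solving the normal equations, checking the orthogonality of the residuals to the columns of \(X\), and showing how a single outlier shifts the estimate. The data, the seed, the coefficient values in `beta_true`, and all variable names are assumptions made for illustration. The sketch solves the linear system with `np.linalg.solve` rather than forming \((X^T X)^{-1}\) explicitly, which is a common numerical preference and not something the formula itself requires.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: an intercept column plus two random predictors.
n = 50
X = np.column_stack([np.ones(n), rng.normal(size=n), rng.normal(size=n)])
beta_true = np.array([1.0, 2.0, -0.5])  # illustrative values only
y = X @ beta_true + 0.1 * rng.normal(size=n)

# Normal equations: solve (X^T X) beta = X^T y.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Cross-check against NumPy's general least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# Projection property: the residual vector is orthogonal to every column of X.
residuals = y - X @ beta_hat
orthogonality = X.T @ residuals

# Outlier sensitivity: corrupt one observation and refit.
y_outlier = y.copy()
y_outlier[0] += 100.0
beta_outlier = np.linalg.solve(X.T @ X, X.T @ y_outlier)

print("normal equations:", beta_hat)
print("lstsq:           ", beta_lstsq)
print("X^T residuals:   ", orthogonality)
print("with one outlier:", beta_outlier)
```

When \(X^T X\) is close to singular, the normal equations can lose accuracy, and a solver such as `np.linalg.lstsq` is usually the safer choice.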

Precursors

- Linear algebra (matrix operations)

- Differential calculus (for finding minima)

- Theory of errors in observation (developed by astronomers)

- Analytic geometry (Descartes)

Applications

- parameter estimation in linear regression models

- signal processing and digital filtering

- control theory for system identification

- econometrics for modeling economic relationships

- astronomical calculations of orbits

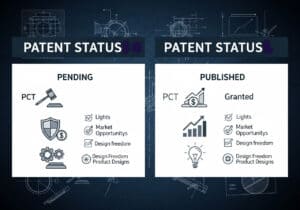
