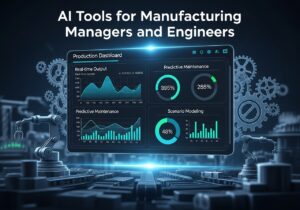

Online AI tools are rapidly transforming mechanical engineering by augmenting human capabilities in design, analysis, manufacturing, and maintenance. These AI systems can process large amounts of data, identify complex patterns, and generate new solutions much faster than traditional methods. For example, AI can help you optimize designs for performance and manufacturability, speed up complex simulations, predict material properties, and automate a wide range of analytical tasks.

The prompts below cover tasks such as:

- generative design;
- simulation acceleration (FEA/CFD);
- predictive maintenance, where AI analyzes machine sensor data to forecast potential failures, enabling proactive servicing and minimizing downtime;
- material selection;
- and much more.
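Here is a minimal Python sketch of the predictive-maintenance idea. It flags sensor readings that deviate sharply from their recent history using a trailing-window z-score. This is a deliberately simple statistical stand-in, far simpler than what a production AI maintenance tool would use. The function name `flag_anomalies`, the window size, the 3-sigma threshold and the vibration values are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` sample standard
    deviations. `window` must be at least 2 for stdev() to be defined."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical RMS vibration readings (mm/s) from a single bearing sensor.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 2.0, 6.5, 2.1]
print(flag_anomalies(vibration))  # → [8]
```

The window and threshold would likely need tuning for each machine. A flag of this kind is best treated as a prompt for inspection rather than a diagnosis.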

Conceptual Design and Brainstorming

[prompt_formatter title=”Novel Mechanism Concept Generation” description=”Proposes various mechanical concepts to achieve a specific motion or task, expanding the engineer’s solution space. It details the operating principles, advantages, and disadvantages of each proposed mechanism.” temperature=”0.8″ thinking=”high”]
## TASK DESCRIPTION
Generate novel mechanical concepts to achieve the specified motion or task. Provide detailed operating principles, advantages, and disadvantages for each concept.

## INPUT
1. **Specific Motion or Task**: the motion or task that the mechanism needs to achieve: {specific_motion_or_task}.
2. **Constraints and Requirements**: the constraints or requirements that must be considered: {constraints_and_requirements}.

## OUTPUT
1. **Concept List**: Generate a list of at least three novel mechanical concepts.
2. **Operating Principles**: For each concept, describe the operating principles in detail.
3. **Advantages and Disadvantages**: Provide a comprehensive list of advantages and disadvantages for each concept.

## INSTRUCTIONS
1. Analyze the specified motion or task and constraints.
2. Generate a diverse set of mechanical concepts that could achieve the motion or task.
3. For each concept, detail the operating principles, highlighting how it achieves the motion or task.
4. List the advantages and disadvantages, considering factors such as efficiency, complexity, cost, and reliability.
5. Ensure the concepts are innovative and expand the solution space beyond conventional approaches.

## NOTES
- Focus on creativity and feasibility.
- Consider interdisciplinary approaches if applicable.
- Use diagrams or sketches if necessary to illustrate complex concepts.
[/prompt_formatter]
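The prompts in this article use `{placeholder}` fields, and these happen to match Python's built-in `str.format` syntax. Here is a minimal sketch of filling them programmatically while refusing to send a prompt that still has unfilled fields. `placeholders` and `fill_prompt` are hypothetical helper names, and the template is an abridged excerpt of the INPUT section of the mechanism-concept prompt.

```python
from string import Formatter


def placeholders(template):
    """List the {field} names that appear in a prompt template, in order."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text, so filter it out.
    return [field for _, field, _, _ in Formatter().parse(template) if field]


def fill_prompt(template, **values):
    """Fill a prompt template, raising KeyError if any field has no value."""
    missing = [name for name in placeholders(template) if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return template.format(**values)


# Abridged excerpt of the INPUT section of the mechanism-concept prompt.
template = (
    "1. **Specific Motion or Task**: {specific_motion_or_task}.\n"
    "2. **Constraints and Requirements**: {constraints_and_requirements}."
)
prompt = fill_prompt(
    template,
    specific_motion_or_task="convert continuous rotation into intermittent 90-degree indexing",
    constraints_and_requirements="fits in a 100 mm cube, no electronics",
)
print(prompt)
```

`str.format` treats every literal `{` or `}` as a field delimiter. A template that also contains JSON or code braces would therefore need those braces doubled (`{{`, `}}`) before filling.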

[prompt_formatter title=”Biomimicry for Engineering Design” description=”Identifies biological systems that have solved a similar engineering problem, providing inspiration from nature for innovative designs. It explains the natural mechanism and how it can be adapted for a technical application.” temperature=”0.7″ thinking=”high”]
## TASK OVERVIEW
Identify biological systems that have effectively addressed engineering challenges similar to {your_engineering_problem}. Provide insights into how these natural mechanisms function and propose adaptations for technical applications.

## INPUT REQUIREMENTS
1. Define the specific engineering problem: {your_engineering_problem}.
2. Specify any constraints or requirements for the solution: {constraints_and_requirements}.

## OUTPUT STRUCTURE
1. **Biological System Identification**
   - Identify and describe biological systems that have solved similar problems.
   - Explain the natural mechanisms involved.

2. **Mechanism Analysis**
   - Analyze the efficiency and effectiveness of these mechanisms.
   - Discuss the environmental conditions under which they operate.

3. **Adaptation Proposal**
   - Propose how these natural mechanisms can be adapted for the engineering challenge given as input.
   - Suggest potential materials, structures, or processes inspired by these systems.

4. **Feasibility Assessment**
   - Evaluate the feasibility of implementing the proposed adaptations.
   - Consider technical, economic, and environmental factors.

## ADDITIONAL INSTRUCTIONS
- Use scientific terminology and provide references to relevant studies or biological research.
- Ensure clarity and precision in the explanation of mechanisms and adaptations.
- Highlight innovative aspects of the proposed solutions.
[/prompt_formatter]

[prompt_formatter title=”Product Design Specification (PDS) Outline” description=”Generates a comprehensive template for a Product Design Specification (PDS) document. This ensures all key requirements, such as performance metrics, material constraints, and safety standards, are defined at the start of a project.” temperature=”0.3″ thinking=”medium”]
# PRODUCT DESIGN SPECIFICATION (PDS) OUTLINE TEMPLATE GENERATION

## OBJECTIVE
Generate a detailed Product Design Specification (PDS) template to define all key project requirements, including performance metrics, material constraints, and safety standards.

## INSTRUCTIONS
1. **PROJECT OVERVIEW**
   - Provide a brief description of the project, including its purpose and scope.
   - Define the target market and user needs.

2. **PERFORMANCE METRICS**
   - List all critical performance metrics that the product must achieve.
   - Include quantitative targets and methods for measurement.

3. **MATERIAL CONSTRAINTS**
   - Specify any material requirements or restrictions.
   - Consider factors such as durability, cost, and environmental impact.

4. **SAFETY STANDARDS**
   - Identify relevant safety standards and regulations.
   - Outline compliance requirements and testing procedures.

5. **FUNCTIONAL REQUIREMENTS**
   - Detail the essential functions the product must perform.
   - Include user interface and usability considerations.

6. **ENVIRONMENTAL CONSIDERATIONS**
   - Address environmental impact and sustainability goals.
   - Include lifecycle analysis and end-of-life disposal plans.

7. **COST AND BUDGET CONSTRAINTS**
   - Define budget limitations and cost targets.
   - Consider production, maintenance, and operational costs.

8. **TIMELINE AND MILESTONES**
   - Establish a project timeline with key milestones.
   - Include deadlines for each phase of the project.

## OUTPUT FORMAT
Provide the PDS template in a structured format, ready for customization with project-specific details.

## USER INPUT
Replace placeholders with specific project information where applicable.

## ADDITIONAL NOTES
Ensure the template is adaptable to various types of products and industries.
[/prompt_formatter]
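The eight PDS sections requested by the prompt map naturally onto a small data structure. Here is a sketch that assumes you want to track which PDS fields are still empty. It keeps the section names and condensed sub-items from the prompt in a dictionary, renders a Markdown skeleton with `TBD` markers, and lists unfilled items. `PDS_SECTIONS`, `render_skeleton`, `unfilled` and the sample value are hypothetical.

```python
# Section names from the PDS prompt; sub-items condensed from its bullet points.
PDS_SECTIONS = {
    "Project Overview": ["Purpose and scope", "Target market and user needs"],
    "Performance Metrics": ["Quantitative targets", "Measurement methods"],
    "Material Constraints": ["Material requirements or restrictions",
                             "Durability, cost, and environmental impact"],
    "Safety Standards": ["Applicable standards and regulations",
                         "Compliance and testing procedures"],
    "Functional Requirements": ["Essential functions", "User interface and usability"],
    "Environmental Considerations": ["Sustainability goals",
                                     "Lifecycle analysis and end-of-life disposal"],
    "Cost and Budget Constraints": ["Budget limits and cost targets",
                                    "Production, maintenance, and operational costs"],
    "Timeline and Milestones": ["Key milestones", "Phase deadlines"],
}


def render_skeleton(sections, filled=None):
    """Render a numbered Markdown PDS skeleton; empty items show as TBD."""
    filled = filled or {}
    lines = ["# Product Design Specification", ""]
    for number, (section, items) in enumerate(sections.items(), start=1):
        lines.append(f"## {number}. {section}")
        for item in items:
            lines.append(f"- **{item}**: {filled.get((section, item), 'TBD')}")
        lines.append("")
    return "\n".join(lines)


def unfilled(sections, filled):
    """Return (section, item) pairs that have no value yet."""
    return [(s, i) for s, items in sections.items() for i in items
            if not filled.get((s, i))]


# Hypothetical project value keyed by (section, item).
filled = {("Performance Metrics", "Quantitative targets"): "Max deflection 0.5 mm under 200 N"}
skeleton = render_skeleton(PDS_SECTIONS, filled)
print(skeleton)
print(len(unfilled(PDS_SECTIONS, filled)), "items still TBD")
```

Keying values by `(section, item)` lets the same structure serve both as the blank template and as a completeness check while the specification is being filled in.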

[prompt_formatter title=”System Architecture Brainstorming” description=”Lays out several high-level system architectures for a complex product with given subsystems, showing different ways to arrange subsystems. Generate diagrams in Mermaid format if necessary. This helps in comparing trade-offs between modular, integrated, and other design philosophies early on.” temperature=”0.7″ thinking=”high”]
**TASK OVERVIEW**
Generate high-level system architectures for a complex product using the provided subsystems. Explore various arrangements to compare trade-offs between modular, integrated, and other design philosophies. Use Mermaid format for diagram generation if necessary.

**INPUT REQUIREMENTS**
1. List of subsystems: {list_of_subsystems}
2. Design philosophies to explore: {design_philosophies}
3. Specific constraints or requirements: {constraints_requirements}

**OUTPUT**
1. Multiple high-level system architectures showcasing different arrangements of the given subsystems.
2. Diagrams in Mermaid format for each architecture, if applicable.
3. Analysis of trade-offs for each design philosophy, focusing on modularity, integration, and other specified philosophies.

**PROCESS**
1. Analyze the list of subsystems and identify potential interactions and dependencies.
2. For each design philosophy, create a high-level architecture that arranges the subsystems accordingly.
3. Generate diagrams in Mermaid format to visually represent each architecture.
4. Evaluate the trade-offs of each architecture, considering factors such as scalability, flexibility, complexity, and performance.
5. Summarize findings and highlight key differences between the architectures.

**MERMAID DIAGRAM TEMPLATE**
```mermaid
graph TD
A[Subsystem A] --> B[Subsystem B]
B --> C[Subsystem C]
```

**ADDITIONAL NOTES**
Ensure that all architectures adhere to the specified constraints and requirements. Adjust the level of detail in the diagrams based on the complexity of the system and the needs of the analysis.
[/prompt_formatter]
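When comparing architectures, it can help to generate the Mermaid text from a plain list of subsystem interfaces instead of writing it by hand. Each candidate arrangement then becomes just a different edge list. Here is a minimal Python sketch. It assumes quoted node labels (`id["label"]`, which is valid Mermaid flowchart syntax) so that names with spaces or parentheses render safely. The helper names and the drone subsystems are hypothetical.

```python
import re


def node_id(name):
    """Turn a subsystem name into a Mermaid-safe node id (letters, digits, _)."""
    return re.sub(r"\W+", "_", name).strip("_")


def to_mermaid(edges, direction="TD"):
    """Emit a Mermaid flowchart from (source, target) subsystem pairs.
    Labels are quoted; names containing double quotes are not handled."""
    lines = [f"graph {direction}"]
    for src, dst in edges:
        lines.append(f'    {node_id(src)}["{src}"] --> {node_id(dst)}["{dst}"]')
    return "\n".join(lines)


# Hypothetical interfaces for a modular drone architecture.
modular = [
    ("Power Module", "Flight Controller"),
    ("Flight Controller", "Motor Drivers"),
    ("Flight Controller", "Payload Bay"),
]
diagram = to_mermaid(modular)
print(diagram)
```

An integrated variant would simply be another edge list passed to the same function. That keeps the diagrams for each design philosophy directly comparable.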

[prompt_formatter title=”Brainstorming Solutions for a Design Flaw” description=”Generates a list of creative and practical solutions to address a specific, identified design flaw. This accelerates the problem-solving process by providing a wide range of potential fixes.” temperature=”0.8″ thinking=”high”]
**TASK OVERVIEW**
Identify and address a specific design flaw by generating a comprehensive list of creative and practical solutions.

**INPUT REQUIREMENTS**
1. Description of the design flaw: {design_flaw_description}
2. Context...


2 thoughts on “Best 25+ AI Prompts for Mechanical Engineering”

Are we assuming that AI can always generate the best prompts for mechanical engineering? How are they generated?

Will AI make human engineers redundant?